How Model Context Protocol Boosts AI Agent Workflows

MCP: A standardized, context-rich framework that accelerates how AI agents access the tools and resources they need to become a digital labor platform.

On November 25, 2024, large language model (LLM) provider Anthropic open-sourced its Model Context Protocol (MCP). MCP provides a standardized way to connect an AI model, such as one of Anthropic's Claude models, to different data sources and tools.

MCP has been characterized by Anthropic and many others as a “USB-C port for AI applications.” Just as USB-C is a standardized way to connect a laptop to an external mouse and keyboard, MCP is a standardized way to connect an AI model (or AI agent) to tools and resources.

Per the MCP user guide, MCP helps developers connect LLM-based AI agents to tools (pre-defined interfaces to interact with external capabilities), resources (e.g., database records, file contents, API responses) and prompt templates (pre-written prompts to help guide complex tasks). As Anthropic wrote, one of the key benefits of MCP is that rather than “maintaining separate connectors for each data source, developers can now build against a standard protocol.”
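To make the three capability types concrete, here is a minimal sketch in plain Python. The class names, fields, sample URI and sample entries are all illustrative assumptions; they are not the types of any official MCP SDK.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical, simplified models of the three capability types described
# above. Illustrative only; not taken from any MCP SDK.

@dataclass
class Tool:
    name: str
    description: str
    handler: Callable[..., str]  # code that performs the external action


@dataclass
class Resource:
    uri: str      # where the data lives (the URI below is made up)
    content: str  # e.g., a file's contents or a database record


@dataclass
class PromptTemplate:
    name: str
    template: str  # pre-written prompt with named placeholders

    def render(self, **values: str) -> str:
        # Fill the placeholders with the supplied values.
        return self.template.format(**values)


def add(a: int, b: int) -> str:
    return str(a + b)


tool = Tool(name="add", description="Add two integers.", handler=add)
resource = Resource(uri="file:///notes/todo.txt", content="Renew license")
prompt = PromptTemplate(name="summarize", template="Summarize this text: {text}")

tool_result = tool.handler(2, 3)
rendered_prompt = prompt.render(text=resource.content)
print(tool_result)
print(rendered_prompt)
```

In this sketch a tool does something, a resource holds data to read, and a prompt template shapes what is sent to the model.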

Many LLMs support a feature called “function calling,” which allows an AI agent or simpler chatbot built on the LLM to connect to an external tool or data source. Per this explainer article, an AI agent cannot answer a “what’s the weather today” request on its own because that information is not in its training data. But it can “call” an external weather service API to obtain the weather and then provide it to the end user.
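The weather example can be sketched in a few lines of Python. This is a toy illustration under stated assumptions: the model's output is simulated as a JSON string, `get_weather` is a hypothetical stand-in for a real weather API, and the forecast data is hard-coded.

```python
import json

# Hypothetical stand-in for an external weather API. A real agent would make
# a network request here instead of reading a hard-coded table.
def get_weather(city: str) -> str:
    fake_forecasts = {"Boston": "Sunny, 22 C"}
    return fake_forecasts.get(city, "No forecast available")


# Registry mapping tool names to the Python functions that implement them.
TOOLS = {"get_weather": get_weather}


def handle_model_output(model_output: str) -> str:
    """Run the tool call the model asked for and return the tool's result."""
    call = json.loads(model_output)
    tool = TOOLS[call["name"]]
    return tool(**call["arguments"])


# Simulated model output: rather than answering directly, the model emits a
# structured request naming the tool and its arguments.
simulated_output = json.dumps(
    {"name": "get_weather", "arguments": {"city": "Boston"}}
)

weather = handle_model_output(simulated_output)
print(weather)
```

In a real agent, the tool's result would be passed back to the model so it can phrase the final answer for the end user.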

Without MCP, developers must create a separate connector for each data source or application they want the AI agent to access. With MCP, they write the integration once, and their AI agent can speak with any MCP-compliant server. Per Anthropic, MCP follows a client-server architecture. Per this Cloudflare tutorial, an MCP server exposes specific capabilities to MCP clients. Each client is embedded in an MCP host, which is an AI-powered application. Essentially, the host is the interface for the end user.
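The client-server exchange can be sketched with a toy, in-process example. The MCP specification builds on JSON-RPC 2.0 and defines methods such as `tools/list` and `tools/call`. Everything else here is a simplifying assumption: the class names, the `echo` tool, the direct function call in place of a real transport, and the omission of the initialization handshake.

```python
import json


class ToyServer:
    """Toy stand-in for an MCP server; it exposes one hypothetical tool."""

    def __init__(self):
        self._tools = {
            "echo": {
                "description": "Return the text it was given.",
                "run": lambda args: args["text"],
            }
        }

    def handle(self, raw_request: str) -> str:
        request = json.loads(raw_request)
        method = request["method"]
        if method == "tools/list":
            # Discovery: describe every tool this server offers.
            result = {
                "tools": [
                    {"name": name, "description": tool["description"]}
                    for name, tool in self._tools.items()
                ]
            }
        elif method == "tools/call":
            # Invocation: run the named tool with the given arguments.
            params = request["params"]
            text = self._tools[params["name"]]["run"](params["arguments"])
            result = {"content": [{"type": "text", "text": text}]}
        else:
            # -32601 is the JSON-RPC 2.0 "Method not found" error code.
            error = {"code": -32601, "message": "Method not found"}
            return json.dumps(
                {"jsonrpc": "2.0", "id": request["id"], "error": error}
            )
        return json.dumps(
            {"jsonrpc": "2.0", "id": request["id"], "result": result}
        )


class ToyClient:
    """Toy stand-in for the MCP client that lives inside a host application."""

    def __init__(self, server: ToyServer):
        self._server = server
        self._next_id = 0

    def request(self, method: str, params=None):
        self._next_id += 1
        message = {"jsonrpc": "2.0", "id": self._next_id, "method": method}
        if params is not None:
            message["params"] = params
        # A real client would send this over stdio or HTTP, not a direct call.
        response = json.loads(self._server.handle(json.dumps(message)))
        if "error" in response:
            raise RuntimeError(response["error"]["message"])
        return response["result"]


client = ToyClient(ToyServer())
tool_names = [tool["name"] for tool in client.request("tools/list")["tools"]]
reply = client.request(
    "tools/call", {"name": "echo", "arguments": {"text": "hello"}}
)
print(tool_names)
print(reply["content"][0]["text"])
```

Because the client only relies on the message format, the same client code could talk to any server that answers these two methods, which is the "write once" benefit described above.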

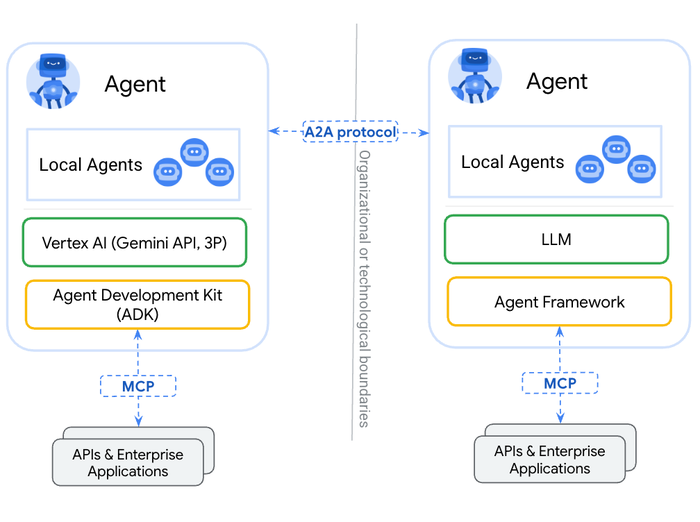

The Agent2Agent protocol (A2A) and MCP are complementary protocols. A2A enables AI agents to talk to each other. MCP allows AI agents to discover tools, APIs and resources. The following graphic illustrates how A2A and MCP work together.

Source: The A2A Project

As support for MCP increases, more software vendors will create MCP-compliant servers through which they will expose the capabilities of their solutions. MCP servers already exist for PayPal, Asana, Twilio, Box, ElevenLabs and others. Other companies, such as Microsoft, Anthropic and OpenAI, have incorporated MCP support into their products.

In summary, MCP makes it easier to provide AI agents with access to the resources they need to act on behalf of an end user or other system. A2A standardizes how AI agents communicate and collaborate. Together, MCP and A2A move AI agents closer to becoming the digital labor platform advocated for by many of the same vendors promoting those two standards.
